Generative AI is a type of artificial intelligence technology that can produce various types of content, including text, imagery, audio and synthetic data. The recent buzz around generative AI has been driven by the simplicity of new user interfaces for creating high-quality text, graphics and videos in a matter of seconds.

With all the hype around generative AI in the past year, it may seem like flashy new technology, but it is not brand-new: generative AI has roots in the 1950s and 1960s, and it was first introduced in chatbots in the 1960s. However, it was not until 2014, with the introduction of generative adversarial networks (GANs), a type of machine learning algorithm, that generative AI could create convincingly authentic images, videos and audio of real people.

Rapid advances in so-called large language models (LLMs), that is, models with billions or even trillions of parameters, have opened a new era in which generative AI models can write engaging text, paint photorealistic images and even create somewhat entertaining sitcoms on the fly. Moreover, innovations in multimodal AI enable teams to generate content across multiple types of media, including text, graphics and video. This is the basis for tools like DALL-E that automatically create images from a text description, as well as tools that generate text captions from images.

Generative AI has found applications in various fields, including art generation, text completion, image synthesis, and more. However, it also raises ethical concerns, such as the potential for generating misleading content or deepfakes.

AI is often used as a blanket term to describe a variety of advanced computer systems. While AI and generative AI are related, generative AI is only one branch of the broader field of artificial intelligence. Some people even use the terms AI, generative AI, machine learning, and large language models (LLMs) interchangeably, so it’s important to understand the differences. First, let’s differentiate AI from generative AI.

AI refers to the broader field of computer science that pursues the creation of intelligent machines able to perform tasks that typically require human intelligence. AI can perform tasks like speech recognition, problem-solving, perception, and language understanding. It can also be designed for more specific tasks, like diagnosing medical conditions or playing chess. A wide range of techniques is used in AI, including machine learning, natural language processing, and expert systems. Such systems can analyze data and respond to it intelligently, for example by classifying, predicting or recommending, but they are not designed to create new content.

Generative AI, on the other hand, takes it a step further by using data to create content that’s entirely new and in various formats. Using machine learning models, generative AI produces original content based on patterns learned from existing data.

Both the fields of AI and generative AI continue to evolve and advance. Now, let’s go over some other terms you’ve likely seen floating around in relation to generative AI.

Machine learning is a subfield of AI and a method widely used in AI research. It involves developing algorithms and models that enable computer systems to learn from data and make predictions or decisions. Machine learning systems learn from examples and adjust based on the data they’re exposed to, allowing them to improve their performance over time without being explicitly programmed to do so.
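To make "learning from examples" concrete, here is a minimal Python sketch of one of the oldest learning rules, the perceptron, learning the logical AND function from four labeled examples. It is an illustration only, not how modern systems are trained; the function names, the learning rate of 1.0 and the 10 training passes are our own arbitrary choices. Note that the AND rule is never written into the code: the weights start at zero and are nudged after each wrong prediction until the outputs match the examples.

```python
def predict(weights, bias, inputs):
    # Output 1 if the weighted sum of the inputs plus the bias is positive, else 0
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=10, lr=1.0):
    # Start with no knowledge: all weights and the bias are zero
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Adjust only when the prediction was wrong (error is -1 or +1)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Labeled examples of the AND function: ((input1, input2), expected output)
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_examples)
for inputs, target in and_examples:
    print(f"{inputs} -> {predict(weights, bias, inputs)} (expected {target})")
```

A single perceptron can only learn patterns that a straight line can separate, which is one reason modern systems stack many such units into networks.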

Large language models (LLMs) are the newest and least clearly defined of these concepts. A large language model, like OpenAI’s GPT-3, is a type of machine learning model that is trained on a large amount of text and specializes in understanding and generating language, which lets it produce natural-sounding replies.

Deep learning is a subfield of machine learning that focuses on training neural networks with many layers (hence "deep"), which can capture more complex patterns than traditional machine learning methods.

Neural networks are inspired by the way the human brain’s neurons are interconnected. They consist of interconnected units that process and transform input data into meaningful outputs. They learn from many examples over time and are used for tasks like pattern recognition, classification, regression, and more.
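The Python sketch below illustrates the "interconnected units" idea with a tiny network of three threshold units that computes XOR (output 1 when exactly one input is 1), something a single unit cannot do on its own. The weights are set by hand purely for illustration and the function names are hypothetical; in a real network the weights would be learned from examples, and smooth activations such as the sigmoid or ReLU are typically used instead of a hard threshold.

```python
def step(z):
    # Threshold activation: the unit "fires" (1) if its weighted input is positive
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    # One unit: weighted sum of its inputs plus a bias, passed through the activation
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def tiny_network(x1, x2):
    # Hidden layer: two units, each detecting a simple pattern in the raw inputs
    h_or = neuron([x1, x2], [1, 1], -0.5)   # fires if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], -1.5)  # fires only if both inputs are 1
    # Output unit combines the hidden units: "OR but not AND" is XOR
    return neuron([h_or, h_and], [1, -1], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```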

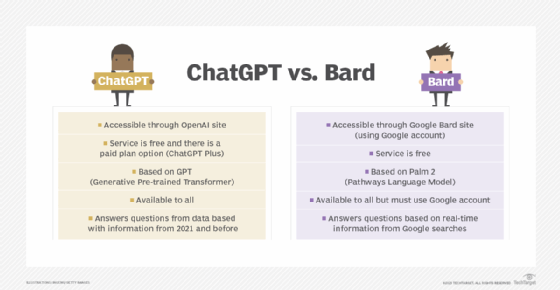

ChatGPT and Bard are popular generative AI interfaces.

ChatGPT. The AI-powered chatbot that took the world by storm in November 2022 was built on OpenAI's GPT-3.5 implementation. OpenAI provided a way to interact with and refine text responses via a chat interface with interactive feedback. Earlier versions of GPT were only accessible via an API. GPT-4 was released on March 14, 2023. ChatGPT incorporates the history of its conversation with a user into its results, simulating a real conversation. After the enormous popularity of the new GPT interface, Microsoft announced a significant new investment in OpenAI and integrated a version of GPT into its Bing search engine.

Bard. Google was another early leader in pioneering transformer AI techniques for processing language, proteins and other types of content. It open sourced some of these models for researchers but never released a public interface for them. Microsoft's decision to integrate GPT into Bing drove Google to rush a public-facing chatbot, Google Bard, to market, built on a lightweight version of its LaMDA family of large language models. Google suffered a significant loss in stock price following Bard's rushed debut, after the language model incorrectly said the James Webb Space Telescope was the first to take pictures of a planet outside our solar system. Meanwhile, Microsoft's GPT-powered Bing and ChatGPT also lost face in their early outings due to inaccurate results and erratic behavior. Google has since unveiled a new version of Bard built on its most advanced LLM, PaLM 2, which allows Bard to be more efficient and visual in its responses to user queries.


Generative AI uses machine learning to analyze common patterns and arrangements in large sets of data, and then uses this information to generate new content similar to the data it was trained on. In general, the more (and the more varied) data or examples a generative model has to learn from, the more sophisticated its output can become.

Here’s a quick overview of the generative AI process:

Data collection and preprocessing: A dataset of relevant examples, whether text, images or any other type of data the model is intended to generate, is collected. The data is then preprocessed (for example, cleaned, normalized and deduplicated) to ensure consistency and quality.
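As a rough illustration of text preprocessing, the Python sketch below normalizes case and whitespace, drops empty and duplicate entries, splits text into word tokens and maps each token to an integer ID. The `preprocess` function and its naive regex tokenizer are hypothetical simplifications; production pipelines typically use learned subword tokenizers and far more extensive filtering.

```python
import re

def preprocess(texts):
    # Hypothetical pipeline: normalize, deduplicate, tokenize, build a vocabulary
    seen = set()
    cleaned = []
    for t in texts:
        norm = re.sub(r"\s+", " ", t.strip().lower())  # lowercase, collapse whitespace
        if norm and norm not in seen:  # skip empty strings and exact duplicates
            seen.add(norm)
            cleaned.append(norm)
    # Naive word tokenizer: keep runs of letters, digits and apostrophes
    tokenized = [re.findall(r"[a-z0-9']+", t) for t in cleaned]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    token_ids = {tok: i for i, tok in enumerate(vocab)}
    # Represent each text as a list of integer IDs, the form models consume
    encoded = [[token_ids[tok] for tok in toks] for toks in tokenized]
    return vocab, encoded

vocab, encoded = preprocess(["The cat sat.", "the  cat SAT.", "A dog ran!", "   "])
print(vocab)
print(encoded)
```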

Model training: Various types of models are used in generative AI, chosen according to the nature of the data and the desired type of content. The most common include:

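Generative Adversarial Networks (GANs): Two neural networks are trained simultaneously in competition with each other. A generator creates synthetic samples from random noise, while a discriminator tries to tell those samples apart from real training data. As the discriminator gets better at spotting fakes, the generator is pushed to produce more realistic output.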
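The competition can be written as a two-player minimax game. In the standard formulation from the original 2014 GAN work, the discriminator D outputs the probability that a sample is real, and the generator G maps random noise z, drawn from a prior distribution p_z, to a synthetic sample G(z):

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

D tries to maximize V by assigning high probability to real data x and low probability to generated samples, while G tries to minimize V by pushing D(G(z)) toward 1. In practice the two networks are updated in alternating gradient steps, and G is often trained to maximize log D(G(z)) instead, which gives stronger gradients early in training.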

Variational Autoencoders (VAEs): A VAE is a model that learns to encode and decode information. Its encoder compresses each input into a much smaller representation in a space called the latent space; rather than a single point, it produces a probability distribution (a mean and a variance for each latent dimension). Its decoder learns to reconstruct the original input, for example an image, from a point sampled in that latent space. Once trained, the VAE can generate new content by sampling new points in the latent space and decoding them.
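The Python sketch below shows two pieces at the heart of a standard VAE, assuming a Gaussian latent space with a diagonal covariance: sampling a latent vector with the so-called reparameterization trick, and the closed-form KL-divergence term that keeps the encoder's distributions close to a standard normal prior. The encoder and decoder networks are omitted; `mu` and `logvar` stand in for hypothetical encoder outputs, and squared error is just one common choice of reconstruction loss.

```python
import math
import random

def sample_latent(mu, logvar, rng):
    # Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1); isolating the
    # randomness in eps is what lets a real framework backpropagate through mu and logvar
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    # Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))

def vae_loss(x, x_recon, mu, logvar):
    # Reconstruction error (squared error here) plus the KL regularizer
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, logvar)

# Hypothetical encoder output for one input: a mean and log-variance per latent dimension
mu, logvar = [0.5, -1.0], [0.0, math.log(0.25)]
z = sample_latent(mu, logvar, random.Random(0))
print("latent sample:", [round(v, 3) for v in z])
print("KL term:", round(kl_to_standard_normal(mu, logvar), 4))
```

Because training pulls every encoding toward a standard normal distribution, drawing z from N(0, I) and passing it through the trained decoder tends to yield plausible new samples.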

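Autoregressive models: Originally used to predict future values of a sequence from its past values, autoregressive models generate output one element at a time, predicting each new element (such as the next word or pixel) from the elements that came before it. GPT-style LLMs are autoregressive: they generate text by repeatedly predicting the next token.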
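A character-level bigram model is about the simplest possible autoregressive generator, and the Python sketch below uses one to show the generation loop. It conditions only on the single previous character, whereas an LLM conditions on a long context using a neural network, but the loop has the same shape: predict the next element, append it, repeat. The function names and training text are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    # For each character, count which characters follow it in the training text
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    # Autoregressive loop: sample the next character conditioned on the last one,
    # append it, and use the extended sequence for the next step
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:  # no continuation was ever observed: stop early
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]  # sample in proportion to counts
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

model = train_bigram("the cat sat on the mat")
print(generate(model, "t", 20, random.Random(1)))
```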

Sampling from the model: Once the model is trained, you can use it to generate new content. Provide a random input or seed, and the model uses the patterns it has learned to produce new data that shares characteristics with the training data.
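Language models usually output a raw score (logit) for every candidate next token, which is converted into a probability distribution and then sampled. The Python sketch below, using made-up logits, shows how a random seed makes that sampling reproducible. It also shows temperature, a setting many text generators expose (an assumption of this sketch, not every model offers it): values below 1 sharpen the distribution and values above 1 flatten it.

```python
import math
import random

def softmax(logits, temperature=1.0):
    # Turn raw scores into probabilities; lower temperature sharpens, higher flattens
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_index(logits, temperature=1.0, seed=None):
    # Seeded random generator: the same seed always yields the same choice
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

# Hypothetical logits for three candidate next tokens
logits = [2.0, 1.0, 0.1]
print([round(p, 3) for p in softmax(logits, temperature=0.5)])
print([round(p, 3) for p in softmax(logits, temperature=2.0)])
print(sample_index(logits, seed=7), sample_index(logits, seed=7))  # identical picks
```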

Fine-tuning and exploration: Depending on the application, you might fine-tune the model, that is, continue training it on a smaller, targeted dataset, to adjust the quality or style of the generated content. You can also explore the model's capabilities by trying different inputs, adjusting generation settings and experimenting with the generated outputs.
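Fine-tuning typically means continuing to train an already-trained model on a smaller, task-specific dataset rather than starting from scratch. The toy Python sketch below shows that idea on a two-parameter linear model: it starts from hypothetical "pre-trained" values and runs a limited number of gradient-descent steps on a small new dataset. Real fine-tuning applies the same principle to millions or billions of parameters, often with a low learning rate or with most layers frozen.

```python
def gradient_steps(w, b, xs, ys, lr, steps):
    # Plain gradient descent on mean squared error for the model y = w * x + b
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Hypothetical "pre-trained" parameters, assumed to come from earlier, broader training
w_pre, b_pre = 2.0, 1.0

# Small task-specific dataset following a slightly different pattern: y = 2x + 3
new_xs = [0.0, 1.0, 2.0, 3.0]
new_ys = [3.0, 5.0, 7.0, 9.0]

# Fine-tune: start from the pre-trained values, not from zero
w_ft, b_ft = gradient_steps(w_pre, b_pre, new_xs, new_ys, lr=0.05, steps=500)
print(round(w_ft, 3), round(b_ft, 3))
```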

Evaluation: Evaluate the generated content based on various criteria such as realism, coherence, relevance, and aesthetics. Iteratively improve the model based on feedback and evaluation results.
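Some criteria, like aesthetics, usually need human judgment, but others can be measured automatically. One widely used metric for language models is perplexity: the exponential of the average negative log-probability the model assigns to the actual tokens of held-out text. Lower is better, and a model that guesses uniformly among k options has a perplexity of k. The Python sketch below computes it from a list of per-token probabilities, which here are made-up values rather than output from a real model.

```python
import math

def perplexity(token_probs):
    # token_probs: probability the model gave to each actual next token in held-out text
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

uniform_guess = [0.25, 0.25, 0.25, 0.25]  # no better than guessing among 4 options
confident_model = [0.9, 0.8, 0.95, 0.7]   # hypothetical, mostly confident predictions
print(round(perplexity(uniform_guess), 3))
print(round(perplexity(confident_model), 3))
```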